Artificial intelligence has significantly transformed scientific computing in recent years, and the convergence of AI and high-performance computing (HPC) continues to accelerate, Ian Buck, vice president and general manager of Nvidia’s hyperscale and HPC computing division, told EE Times. The shift is driving increased demand for GPUs in the HPC space.

“The HPC and supercomputing communities are now fully aware of AI’s transformative potential,” Buck said. “The advantage is that AI runs on the same GPUs we already use. Nvidia intentionally designs a single GPU architecture that serves all of our users and markets.”

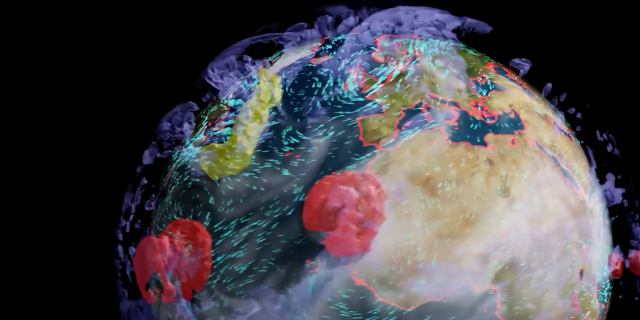

Supercomputers are advancing scientific research across numerous disciplines. Today, many of them run physics-based simulations to model complex phenomena that can’t be easily observed through experiments. Climate change is a prime example: the massive scale and long timescales involved make real-world experiments impractical, so researchers turn to simulations.

“When we understand the physics—whether it’s turbulent airflow or solar radiation—and we have the computing power, we can build a mathematical model of the planet and essentially ‘hit play,’” Buck explained. “These simulations can represent processes that unfold over years, decades, or even centuries.”

With the help of supercomputers, researchers are able to simulate carbon emissions over extended periods and observe the resulting impacts.

According to Buck, one of the biggest hurdles is ensuring accuracy while having sufficient computational power to run these detailed models. He noted that supercomputing often involves striking a balance between detail and practicality: since it’s not feasible to simulate down to the atomic level, scientists must rely on approximations and then work to validate those models.

In climate research, scientists can test models against historical data, but simulating conditions at a global scale remains extremely challenging. For example, accurately modeling cloud formation requires resolving processes at sub-kilometer levels—such as turbulent eddies that occur on the scale of hundreds of meters. While increasing computational resources can improve simulation accuracy, that’s not always feasible. In those cases, another approach is to train AI models to observe and approximate the simulation.

These AI models can run much faster than traditional simulation algorithms. Although they still require thorough validation, they provide researchers with a powerful tool to explore a broader range of scenarios over longer timescales. AI can help detect patterns or phenomena that might be missed due to computational limits, guiding researchers to areas worth further investigation using full physics-based simulations.
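The train-then-validate pattern behind a surrogate can be sketched in a few lines of Python. Everything in this sketch is an assumption made for illustration: the "simulation" is a toy decay equation stepped with explicit Euler, the function names are hypothetical, and a least-squares line stands in for the neural networks used in real surrogates. It is not Nvidia's method, only the general idea.

```python
import math

def simulate(decay_rate, t_end=10.0, dt=1e-3):
    """Toy stand-in for an expensive simulation: explicit Euler for dy/dt = -decay_rate * y."""
    y = 1.0
    for _ in range(int(round(t_end / dt))):  # 10,000 small time steps per run
        y += dt * (-decay_rate * y)
    return y

def fit_surrogate(rates, outputs):
    """Fit a least-squares line to (rate, log(output)) and return a fast predictor."""
    log_outputs = [math.log(v) for v in outputs]
    n = len(rates)
    mean_x = sum(rates) / n
    mean_y = sum(log_outputs) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(rates, log_outputs))
    variance = sum((x - mean_x) ** 2 for x in rates)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return lambda rate: math.exp(intercept + slope * rate)

# "Training": run the full simulation at a handful of parameter values.
train_rates = [0.1, 0.2, 0.3, 0.4, 0.5]
surrogate = fit_surrogate(train_rates, [simulate(r) for r in train_rates])

# "Validation": compare the surrogate with the full simulation at an unseen value.
held_out_rate = 0.35
reference = simulate(held_out_rate)
estimate = surrogate(held_out_rate)
relative_error = abs(estimate - reference) / reference
print(f"simulation={reference:.6f} surrogate={estimate:.6f} rel_error={relative_error:.2e}")
```

Once fitted, the surrogate answers with a single formula evaluation instead of thousands of time steps, and the held-out comparison is the validation step the researchers still need.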

Many of today’s new supercomputers featuring Nvidia’s Grace Hopper CPU-GPU architecture and Hopper GPUs are designed to support this kind of AI surrogate training and inference. Nvidia itself is building Earth-2, a supercomputer dedicated to running a digital twin of the planet for climate modeling. Earth-2 will combine GH200 (Grace Hopper), HGX H100 (Hopper AI GPUs), and OVX (Ada Lovelace GPUs for graphics and AI) systems.

Nvidia’s Earth-2 supercomputer will run a digital twin of the planet at unprecedented resolution to help advance climate change research.

(Source: Nvidia)

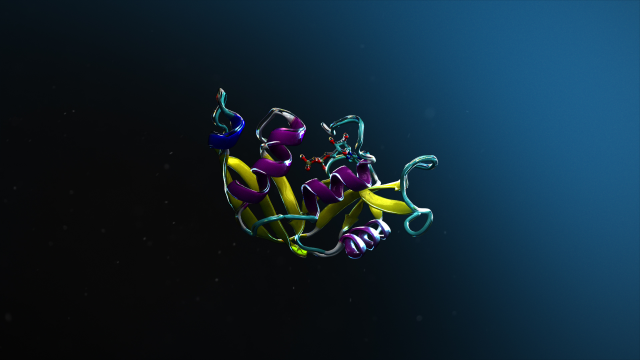

AI surrogate models are also being applied at the molecular level to study protein folding and understand how biological molecules, such as viruses, function. Simulating the interaction between viruses and potential drugs is complex due to the need for very short time steps over extended periods. This makes traditional simulations computationally expensive and time-consuming. However, AI surrogates can approximate these processes much faster, enabling researchers to accelerate simulations and explore more therapeutic possibilities in less time.
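A rough count shows why short time steps over long periods are so costly. The figures below are assumptions chosen for illustration and do not come from the interview: a time step of 2 femtoseconds, which is typical of molecular dynamics, and a hypothetical target of one millisecond of simulated time.

```python
# Work in whole femtoseconds to keep the arithmetic exact.
FEMTOSECONDS_PER_MILLISECOND = 10**12

time_step_fs = 2                                  # assumed step size
simulated_span_fs = FEMTOSECONDS_PER_MILLISECOND  # hypothetical target: 1 ms

steps_needed = simulated_span_fs // time_step_fs
print(f"{steps_needed:,} sequential time steps")
```

Each step depends on the one before it, so the steps cannot simply be run in parallel, which is what makes a fast approximation attractive.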

Protein folding is one of several areas of scientific computing that can make use of AI surrogates to speed up scientific discovery.

(Source: Nvidia)

AI is increasingly being used to enhance existing scientific processes. One key application is in developing AI-based preconditioners, which help scientists solve complex mathematical equations more efficiently. These preconditioners transform the matrix that represents a system of equations into a form that is easier for numerical solvers to handle. The approach remains numerically exact, because the solver still converges to the solution of the original system, but it significantly accelerates computation.

Preconditioners are commonly used in computer-aided engineering tasks, such as simulating vehicle crash scenarios. AI can automate and improve the process of creating these preconditioners, making it more accessible and scalable across a variety of scientific domains.
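To make the role of a preconditioner concrete, here is a minimal sketch in Python using a classical, hand-written Jacobi (diagonal) preconditioner with the conjugate gradient method. It is not an AI-generated preconditioner and not taken from the interview; the test matrix, the tolerance, and all function names are assumptions chosen for illustration. The point is only that both runs reach the same answer while the preconditioned one needs fewer iterations.

```python
import math

def matvec(diag, off, x):
    """Multiply a symmetric tridiagonal matrix (diagonal `diag`, constant off-diagonal `off`) by x."""
    y = [d * xi for d, xi in zip(diag, x)]
    for i in range(len(x) - 1):
        y[i] += off * x[i + 1]
        y[i + 1] += off * x[i]
    return y

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def conjugate_gradient(diag, off, b, precondition, tol=1e-10, max_iter=1000):
    """Solve A x = b; returns (x, iterations). Optionally applies M^-1 = 1/diag(A)."""
    if precondition:
        apply_m_inv = lambda v: [vi / di for vi, di in zip(v, diag)]
    else:
        apply_m_inv = lambda v: list(v)
    x = [0.0] * len(b)
    r = list(b)                      # residual b - A x for x = 0
    z = apply_m_inv(r)
    p = list(z)
    rz = dot(r, z)
    b_norm = math.sqrt(dot(b, b))
    for iteration in range(1, max_iter + 1):
        ap = matvec(diag, off, p)
        alpha = rz / dot(p, ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, ap)]
        if math.sqrt(dot(r, r)) <= tol * b_norm:
            return x, iteration
        z = apply_m_inv(r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_iter

# Assumed test problem: a badly scaled, symmetric positive definite tridiagonal matrix.
n = 100
diag = [2.0 + i * i for i in range(n)]
b = [1.0] * n

x_plain, iters_plain = conjugate_gradient(diag, -1.0, b, precondition=False)
x_jacobi, iters_jacobi = conjugate_gradient(diag, -1.0, b, precondition=True)
max_diff = max(abs(u - v) for u, v in zip(x_plain, x_jacobi))
print(f"plain: {iters_plain} iterations, Jacobi: {iters_jacobi} iterations, max diff: {max_diff:.2e}")
```

An AI-based preconditioner would replace the simple division by the diagonal with a learned transformation, but it would plug into the solver at the same place.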

Nvidia supports these advances with workflows designed for building AI surrogate models, as well as tools like its Modulus framework, which allows for training AI using physical laws. It also provides specialized foundation models like BioNeMo for drug discovery applications.

The architecture of supercomputers is also evolving to meet the demands of AI and accelerated computing. A major shift is happening in how CPUs and GPUs communicate. Traditional systems connected CPUs and GPUs over PCIe, offering limited bandwidth. Nvidia’s Grace Hopper superchip represents a significant leap forward, integrating CPU and GPU into a unified system with a high-bandwidth connection: 450 GB/s in each direction, or 900 GB/s total.
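A back-of-the-envelope calculation gives a feel for what the bandwidth difference means. The 450 GB/s figure is from the article; the PCIe figure (roughly 64 GB/s per direction for a Gen5 x16 link at theoretical peak) and the 96 GB payload are assumptions added for illustration, and real transfers will fall short of peak rates.

```python
def transfer_seconds(gigabytes, gb_per_second):
    """Idealized time to move a payload one way at a sustained peak rate."""
    return gigabytes / gb_per_second

GRACE_HOPPER_LINK_ONE_WAY = 450.0  # GB/s, per-direction figure quoted in the article
PCIE_GEN5_X16_ONE_WAY = 64.0       # GB/s, assumed approximate theoretical peak
payload_gb = 96.0                  # hypothetical working set

t_link = transfer_seconds(payload_gb, GRACE_HOPPER_LINK_ONE_WAY)
t_pcie = transfer_seconds(payload_gb, PCIE_GEN5_X16_ONE_WAY)
speedup = t_pcie / t_link
print(f"chip-to-chip link: {t_link:.3f} s, PCIe: {t_pcie:.3f} s, ratio: {speedup:.1f}x")
```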

This tighter integration also brings cache coherence, meaning both the CPU and GPU can access shared memory seamlessly. This drastically reduces the complexity of managing data movement, allowing the system to automatically and efficiently manage memory pages. With both processing units effectively operating as a single entity, developers can focus less on data logistics and more on advancing their computational goals.

As Nvidia continues to lead in large-scale AI development, innovations like Grace Hopper are expected to enable faster, more energy-efficient computation. These improvements will not only speed up simulations and discoveries but also pave the way for breakthroughs across fields such as climate science, healthcare, and engineering.