High-Performance Computing (HPC), built to handle demanding computational tasks, is enabling researchers in the life sciences and medical fields to obtain results more quickly and cost-effectively. When integrated with accelerated computing, artificial intelligence (AI), high-bandwidth memory (HBM), and other advanced memory technologies, HPC significantly enhances the speed and efficiency of drug discovery efforts.

According to a recent MarketsandMarkets report, the global HPC market is projected to grow from $36 billion in 2022 to $49.9 billion by 2027. A major factor driving this growth is genomics research, which involves analyzing genetic data to identify disease-linked variations and understand individual responses to treatments. The report highlights that HPC systems have led to improvements in the speed, precision, and dependability of genomic analysis.
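The two endpoints of that projection imply a compound annual growth rate (CAGR) of roughly 6.7% per year. The short sketch below shows the arithmetic. The helper name `cagr` is hypothetical, and the only inputs are the report's figures as quoted above.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1 / years) - 1

# Market size in billions of USD, as quoted from the MarketsandMarkets report.
rate = cagr(start_value=36.0, end_value=49.9, years=2027 - 2022)
print(f"Implied CAGR: {rate:.1%}")
```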

Accelerated Computing

Accelerated computing employs specialized processors—such as graphics processing units (GPUs)—to execute computations more efficiently, delivering faster performance than systems relying solely on central processing units (CPUs).

By combining traditional CPU-based infrastructure with accelerators such as Nvidia GPUs, accelerated computing speeds up workload execution. According to Dion Harris, Director of Accelerated Computing at Nvidia, the HPC community is increasingly adopting this approach to gain both processing speed and energy efficiency.

Energy Efficiency and Cost Benefits of Accelerated Computing

Because workloads finish so much faster, the same calculations can now be completed with significantly less energy, Harris noted. This efficiency is especially important as data centers face growing constraints on power usage.
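The energy argument comes down to energy = power × time: an accelerated node may draw more power, but if it finishes a job much sooner it can still consume less energy overall. The power and runtime figures below are hypothetical, chosen only to show the arithmetic, and `energy_kwh` is an illustrative helper name.

```python
def energy_kwh(avg_power_w: float, runtime_h: float) -> float:
    """Energy in kilowatt-hours for a job running at a constant average power."""
    return avg_power_w * runtime_h / 1000.0

# Hypothetical job: these power and runtime values are assumptions, not measurements.
cpu_only_kwh = energy_kwh(avg_power_w=1_000, runtime_h=10.0)
accelerated_kwh = energy_kwh(avg_power_w=2_500, runtime_h=1.0)

print(f"CPU-only:    {cpu_only_kwh:.1f} kWh")
print(f"Accelerated: {accelerated_kwh:.1f} kWh")
```

In this made-up case the accelerated run draws 2.5 times the power but finishes ten times sooner, so it uses a quarter of the energy.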

Harris also emphasized the cost-effectiveness of accelerated computing. Although adding GPUs increases the initial infrastructure costs, the performance gains and energy savings far outweigh the added expense. In terms of overall system throughput per dollar, accelerated computing often results in better economics and a more efficient data center footprint, he said.

This is a key reason accelerated computing is seeing broad adoption in HPC environments, especially for applications in medicine and drug discovery.

Nvidia’s Role and Ecosystem in Accelerated Computing

Nvidia has been a pioneer in accelerated computing for more than a decade, working with researchers to move from CPU-only architectures to GPU-accelerated solutions. Its efforts span multiple domains, including drug discovery, materials science, quantum chemistry, seismic modeling, and climate simulation.

As Harris explained, a robust application ecosystem has emerged, enabling supercomputer designers to maximize value by incorporating GPU-optimized code into their systems. This has made GPU acceleration a standard for high-throughput, cost-efficient workloads.

Heterogeneous Computing and CPU Selection

According to AMD, building an effective HPC system starts with understanding the specific requirements of your workloads and identifying whether they’re better suited to CPUs or GPUs. Most current HPC architectures rely on heterogeneous computing, which combines both CPUs and GPUs, though some systems still operate with CPUs alone.

When choosing a CPU, AMD advises users to assess:

- Core scalability

- Memory dependency

- PCIe lane requirements

For HPC applications, performance typically scales with core count and memory bandwidth, so CPUs with high core density and greater memory throughput tend to deliver better results.
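To make the trade-off between core count and memory bandwidth concrete, the sketch below computes theoretical peak DRAM bandwidth per core. The platform figures are illustrative assumptions (12 memory channels of DDR5-4800, 8 bytes per transfer), the helper names are hypothetical, and real sustained bandwidth is lower than this peak.

```python
def peak_dram_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                            bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return channels * mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9

def bandwidth_per_core_gbs(total_gbs: float, cores: int) -> float:
    """Share of peak bandwidth each core gets if all cores stream at once."""
    return total_gbs / cores

# Illustrative platform: 12 channels of DDR5-4800 (an assumption, not from the article).
total = peak_dram_bandwidth_gbs(channels=12, mega_transfers_per_s=4800)
for cores in (32, 64, 96):
    print(f"{cores:3d} cores: {bandwidth_per_core_gbs(total, cores):5.1f} GB/s per core")
```

The per-core share shrinks as cores are added to the same memory system, which is why memory-bound codes need more bandwidth, not just more cores.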

High-Bandwidth Memory (HBM) and Its Growing Importance

Memory remains a critical bottleneck in HPC. As data sets grow, so does the demand for memory with both larger capacity and higher bandwidth. AMD predicts that HBM and its variants will become increasingly vital, particularly in GPU-heavy workloads, where performance hinges on rapid data transfer.
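One standard way to reason about this bottleneck is the roofline model, a general performance model rather than anything AMD's prediction relies on: a kernel's attainable throughput is the lesser of the processor's peak compute rate and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs per byte moved). The peak and bandwidth values below are hypothetical.

```python
def attainable_gflops(peak_gflops: float, bandwidth_gbs: float,
                      intensity_flops_per_byte: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic."""
    return min(peak_gflops, bandwidth_gbs * intensity_flops_per_byte)

PEAK_GFLOPS = 50_000.0  # hypothetical accelerator peak compute

for bandwidth in (2_000.0, 4_000.0):   # hypothetical memory bandwidths, GB/s
    for intensity in (0.5, 100.0):     # low- vs high-intensity kernels
        perf = attainable_gflops(PEAK_GFLOPS, bandwidth, intensity)
        bound = "memory-bound" if perf < PEAK_GFLOPS else "compute-bound"
        print(f"BW {bandwidth:>6.0f} GB/s, AI {intensity:>5.1f}: "
              f"{perf:>8.0f} GFLOP/s ({bound})")
```

Doubling bandwidth doubles the low-intensity kernel's throughput but leaves the compute-bound kernel unchanged, which is the sense in which data-hungry GPU workloads depend on memory bandwidth.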

AMD’s upcoming Instinct MI300X accelerator, designed for generative AI and LLMs, will include 192 GB of HBM3, making it well-suited for large-scale, bandwidth-intensive applications.
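A rough way to see why 192 GB matters for generative AI is to estimate how much memory a model's weights alone occupy. The sketch assumes 2 bytes per parameter (FP16/BF16) and ignores activations, the KV cache, and framework overhead, so it gives a lower bound; the model sizes are hypothetical examples and `weight_footprint_gb` is an illustrative name.

```python
HBM_CAPACITY_GB = 192  # MI300X HBM3 capacity cited above

def weight_footprint_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

# Hypothetical model sizes, in parameters.
for params in (13e9, 70e9, 175e9):
    need = weight_footprint_gb(params)
    verdict = "fits" if need <= HBM_CAPACITY_GB else "does not fit"
    print(f"{params / 1e9:.0f}B parameters: {need:.0f} GB of weights, "
          f"{verdict} in {HBM_CAPACITY_GB} GB")
```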

AI + HPC: Transforming Possibilities

Artificial intelligence is now being integrated into traditional HPC simulations, enhancing both speed and scope. According to Harris, the value of this fusion goes beyond cost and efficiency—it’s about achieving breakthroughs that were previously impossible.

AI is accelerating some tasks by factors of 1,000× to 10,000×, offering radically shorter timeframes for gaining insights from data. This is particularly transformative in areas like drug discovery, where a process that usually spans over a decade and billions of dollars can now be significantly shortened.

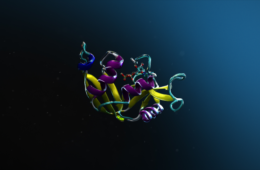

One key step in drug development is molecular docking, which involves evaluating how a drug molecule binds with a target protein. Traditionally a lengthy computational task, docking can now be compressed from a year-long process to just a few months using AI.

Nvidia saw this in practice during the COVID-19 pandemic, when AI-powered docking tools helped speed the search for candidate treatments. Another example is determining the structures of viral proteins, a process that has been revolutionized by tools like AlphaFold. AlphaFold uses AI techniques also common in large language models (LLMs) to predict protein structures thousands of times faster than experimental methods such as cryo-electron microscopy.

The Role of CPUs, GPUs, and Cloud in AI + HPC

Major tech companies like AMD, Intel, and Nvidia are now designing processors specifically for AI and HPC workloads, and many of these solutions are also offered through cloud environments.

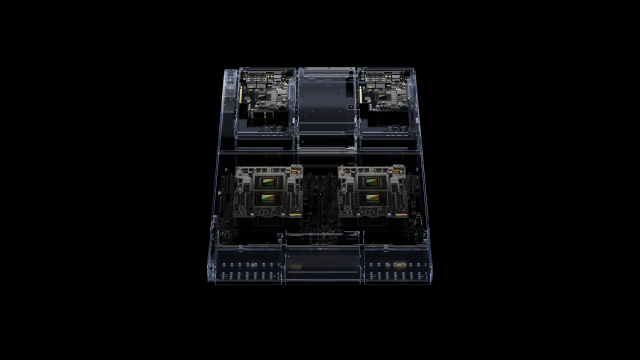

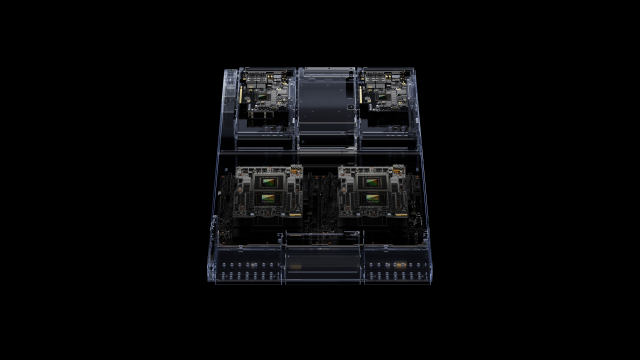

One standout innovation is the Nvidia Grace Hopper Superchip, an integrated CPU-GPU platform designed for large-scale AI and HPC tasks. Its CPU and GPU are tightly linked via NVLink-C2C, which provides high-bandwidth data transfer and gives the GPU efficient access to a large memory pool.

This architecture is particularly beneficial for the graph neural networks used in drug screening and compound analysis. By supporting larger models and more complex computations, Grace Hopper can deliver more accurate, more useful results in AI inference.

Nvidia’s GH200 Grace Hopper platform, based on a new Grace Hopper Superchip with the industry’s first HBM3e processor, is designed for accelerated computing and generative AI.

(Source: Nvidia)
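For readers unfamiliar with the graph neural networks mentioned above, the sketch below shows a single message-passing step on a molecule represented as a graph (atoms as nodes, bonds as edges), using NumPy. It is a generic GCN-style layer with mean aggregation chosen for illustration, not Nvidia's software or any production screening model; the toy molecule, random weights, and function name are hypothetical. Memory use grows with atom count, feature width, and batch size, which is where a large, GPU-accessible memory pool helps.

```python
import numpy as np

def message_passing_layer(adjacency, features, weight):
    """One round of mean-aggregation message passing (GCN-style).

    adjacency: (n, n) 0/1 matrix of bonds between atoms
    features:  (n, d_in) per-atom feature vectors
    weight:    (d_in, d_out) learnable projection
    """
    # Add self-loops so each atom keeps its own features.
    a_hat = adjacency + np.eye(adjacency.shape[0])
    # Average each atom's neighbourhood by row-normalizing.
    degree = a_hat.sum(axis=1, keepdims=True)
    aggregated = (a_hat / degree) @ features
    # Linear projection followed by a ReLU nonlinearity.
    return np.maximum(aggregated @ weight, 0.0)

# Hypothetical toy molecule: a 3-atom chain with 4 features per atom.
adjacency = np.array([[0, 1, 0],
                      [1, 0, 1],
                      [0, 1, 0]], dtype=float)
rng = np.random.default_rng(0)
features = rng.normal(size=(3, 4))
weight = rng.normal(size=(4, 8))

h = message_passing_layer(adjacency, features, weight)
# Pool atom embeddings into one molecule-level vector for downstream scoring.
molecule_embedding = h.mean(axis=0)
print(h.shape, molecule_embedding.shape)
```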

Dion Harris of Nvidia explained that the Grace Hopper Superchip is ideal for applications that require processing on both CPUs and GPUs, offering the flexibility to dynamically balance workloads. It also enables gradual migration to full GPU acceleration—even for applications that haven’t yet been fully optimized for GPUs—using the same development tools and platform.

Grace Hopper includes dynamic power shifting, which reallocates power between the CPU and GPU depending on workload demands. For instance, within a fixed power envelope (e.g., 650 watts), the system could allocate 550 watts to the GPU for AI-heavy tasks and only 100 watts to the CPU, providing seamless adaptation for diverse application needs.
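The power-shifting example above can be modeled as a fixed budget split between two consumers. This is a toy model under stated assumptions: the real mechanism is implemented in Nvidia's hardware and firmware, and the function name and the `cpu_min_w` floor are hypothetical.

```python
def split_power(envelope_w: float, gpu_request_w: float, cpu_min_w: float = 0.0):
    """Split a fixed power envelope between GPU and CPU.

    The GPU receives what it requests, capped so the CPU keeps at least
    cpu_min_w; whatever the GPU does not use is shifted to the CPU.
    """
    if cpu_min_w > envelope_w:
        raise ValueError("CPU floor exceeds the power envelope")
    gpu_w = max(0.0, min(gpu_request_w, envelope_w - cpu_min_w))
    cpu_w = envelope_w - gpu_w
    return gpu_w, cpu_w

ENVELOPE_W = 650  # module power envelope from the example above
print(split_power(ENVELOPE_W, gpu_request_w=550, cpu_min_w=100))  # AI-heavy phase
print(split_power(ENVELOPE_W, gpu_request_w=300, cpu_min_w=100))  # CPU-heavier phase
```

The total always equals the envelope; only the split moves as the workload's demands change.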

While Nvidia strongly supports accelerated computing as the future for most workloads, Harris acknowledged that some workloads remain CPU-centric. For those cases, Nvidia has introduced the Grace CPU, based on the Arm Neoverse V2 architecture, delivering strong performance with high energy efficiency.

The University of Bristol is among the first institutions to deploy the Nvidia Grace CPU Superchip for multi-domain research, including drug discovery. Its new Isambard 3 supercomputer, hosted at the Bristol & Bath Science Park, will use 384 Grace CPU Superchips and is projected to deliver six times the performance and energy efficiency of its predecessor, Isambard 2. The system will reach 2.7 petaflops of FP64 peak performance while consuming under 270 kilowatts of power.

Isambard 3 will support projects such as simulating molecular mechanisms to better understand Parkinson’s disease, as well as research on osteoporosis and COVID-19.
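The published Isambard 3 figures allow a quick sanity check of its efficiency. Treating "under 270 kilowatts" as an upper bound, peak FP64 efficiency works out to at least 10 GFLOPS per watt, or roughly 7 TFLOPS per Grace CPU Superchip. These are peak values derived only from the article's numbers; sustained application performance will be lower.

```python
PEAK_FP64_FLOPS = 2.7e15   # 2.7 petaflops FP64 peak, as reported
POWER_BOUND_W = 270e3      # "under 270 kilowatts", used as an upper bound
SUPERCHIPS = 384           # Grace CPU Superchips in the system

# Actual power is below the bound, so true peak efficiency is at least this.
min_gflops_per_watt = PEAK_FP64_FLOPS / POWER_BOUND_W / 1e9
tflops_per_superchip = PEAK_FP64_FLOPS / SUPERCHIPS / 1e12

print(f"Peak efficiency: >= {min_gflops_per_watt:.1f} GFLOPS/W")
print(f"Peak FP64 per superchip: {tflops_per_superchip:.2f} TFLOPS")
```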

Nvidia’s HPC platforms are also available via the cloud, allowing researchers to run their CUDA-based applications on-premises or in cloud environments. Harris pointed to a growing trend of HPC workloads moving to the cloud.

Nvidia recently launched a suite of generative AI cloud services, part of its AI Foundations portfolio, to accelerate drug discovery and research in genomics, chemistry, biology, and molecular dynamics. One key offering is BioNeMo Cloud, which supports both training and inference of AI models.

BioNeMo enables researchers to customize generative AI models using proprietary data, run AI inference via a web browser or APIs, and use pre-trained models to build AI pipelines for drug discovery.

Early adopters such as Evozyne and Insilico Medicine are leveraging BioNeMo for data-driven drug design. These models can predict 3D protein structures, assess molecular docking, and even generate novel compounds or therapeutic proteins from scratch.

AMD’s adaptive computing and AI solutions are also powering breakthroughs in medical research, offering high performance and energy efficiency for HPC deployments. The Frontier supercomputer at Oak Ridge National Laboratory, powered by AMD EPYC CPUs and Instinct GPUs, achieves 1.194 exaflops on the HPL benchmark and is regarded as the world’s fastest and most energy-efficient supercomputer.

Frontier supports research in various domains, including the CANDLE initiative, which uses predictive modeling to streamline cancer treatment trials, potentially saving years in drug development time.

Systems built on AMD’s Instinct MI250X GPUs and EPYC processors also lead the HPL-MxP mixed-precision benchmark: Frontier scored 9.95 exaflops and LUMI 2.2 exaflops, demonstrating how the convergence of HPC and AI can power climate and cancer research.
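Mixed-precision benchmarks such as HPL-MxP reward doing the expensive solve in low precision and then recovering full FP64 accuracy through iterative refinement. The NumPy sketch below shows the idea with float32 solves and float64 residuals. HPL-MxP itself uses lower-precision factorizations and a more sophisticated refinement step, so treat this as a simplified illustration; `mixed_precision_solve` is a hypothetical name.

```python
import numpy as np

def mixed_precision_solve(a, b, max_iters=10, tol=1e-12):
    """Solve a @ x = b using float32 solves plus float64 iterative refinement."""
    a32 = a.astype(np.float32)
    # Initial solution computed entirely in low precision.
    x = np.linalg.solve(a32, b.astype(np.float32)).astype(np.float64)
    for _ in range(max_iters):
        r = b - a @ x  # residual evaluated in float64
        if np.linalg.norm(r) <= tol * np.linalg.norm(b):
            break
        # Correction solved in float32. A production code would reuse a single
        # low-precision LU factorization here instead of re-solving from scratch.
        d = np.linalg.solve(a32, r.astype(np.float32))
        x += d.astype(np.float64)
    return x
```

Usage: on a well-conditioned system, a few refinement steps bring the float32 answer to float64 accuracy.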

The Molecular Modelling Laboratory (MML) is using Microsoft Azure infrastructure powered by AMD EPYC processors to accelerate computational drug development. MML’s CEO, Georgios Antipas, emphasized the value of cloud-based HPC for scaling quantum chemical modeling and AI workloads, significantly reducing time and cost in early-stage drug development.

MML adopted 3rd-generation AMD EPYC CPUs for their high core density and clock speed, which translated into faster simulation times and more efficient drug formulation.

AMD’s 4th-generation EPYC family offers workload-optimized compute options. The EPYC 9004 Series (Genoa) delivers high performance and energy efficiency across a broad range of HPC tasks with up to 96 cores. The EPYC 97x4 Series (Bergamo) is, according to AMD, the first x86 CPU built for cloud-native computing, using the Zen 4c architecture for high thread density and scalability. EPYC Genoa-X uses 3D V-Cache technology to provide three times the L3 cache of standard Genoa parts, making it well suited to technical computing workloads in which large datasets and memory bandwidth are critical.

According to AMD, Genoa-X’s design alleviates memory bottlenecks and accelerates processing, boosting performance in data-intensive scientific applications.